We saw decision trees for the first time in the very first lectures, and we’ve seen them a few more times since. But we never actually discussed how to train them. In this lecture, we’ll look at the details of that.

Decision trees by themselves are not a very popular model in modern machine learning. They are quick to train, but they can be prone to overfitting, and regularizing them hurts performance a lot.

The main setting in which trees are used is in ensembles: models that combine many other models in order to arrive at a prediction. Specifically, the method of gradient boosted decision trees is a very popular approach for achieving high performance with relatively little effort. In this lecture, we will build this picture up step by step: we will first discuss decision and regression trees in the first two videos, and then different ways to create model ensembles in the last two videos.

Decision trees in their simplest form work on data with categorical features. We’ll use this dataset as a running example: each instance (row) is a movie, and the task is to predict whether a movie won an Oscar, was merely nominated, or was overlooked.

This is what a trained decision tree might look like. Each internal node asks the value of a particular feature, and sends the instance to one of its children depending on the value of that feature.

To classify an instance with this decision tree, we start at the root (the node at the top), and work our way down by answering the question in each node.

If we see a movie with a G rating, the genre drama, and a 2.39:1 aspect ratio, we follow the tree to the highlighted leaf node and label the example as won (i.e. we predict that this movie will win an Oscar).
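As a minimal sketch of this walk from root to leaf, the Python snippet below encodes a small tree as nested tuples. The tree itself, the feature names and the feature values are made up for illustration; they are not the tree from the slide.

```python
# A hypothetical tree: an internal node is a (feature, {value: child}) pair,
# a leaf is just a class label (a string).
tree = ("genre", {
    "scifi": "overlooked",
    "comedy": ("rating", {"G": "nominated", "PG": "overlooked", "R": "overlooked"}),
    "drama": ("aspect_ratio", {"2.39:1": "won", "1.85:1": "nominated"}),
})

def classify(node, instance):
    """Start at the root and follow the branch matching each feature value."""
    while not isinstance(node, str):
        feature, children = node
        node = children[instance[feature]]
    return node

movie = {"rating": "G", "genre": "drama", "aspect_ratio": "2.39:1"}
print(classify(tree, movie))
```

Note that the rating of this movie is never inspected: only the features on the path from the root to the leaf matter.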

So, given the space of all possible trees, how do we find one that fits our data well?

This is how the basic algorithm is set up. We start with an empty tree, and add one node at a time. We don’t backtrack: once a node is added it stays in the tree, and we keep adding nodes until we can add no more.

The node we add at any given moment is the one that creates the least uniform distribution over the classes after the split. Let’s look at an example to see how this works.

Here, we’ve plotted our data for two features (ignoring the third for the moment). We’re choosing the root node of our tree.

If we split by rating, we get three segments (the three rows on the right). Tallying up the proportions of each class, we see that the proportions of the segments are not that different from the proportions in the whole. In other words, knowing the value of the rating doesn’t change the information we have about the class very much.

On the other hand, if we split by genre, we see that the resulting distributions differ much more from the whole: knowing that a movie has the genre scifi allows us to say with near certainty that it won’t win an Oscar.

If we split by genre and then by rating, we get this segmentation of the instance space. Each of these regions, corresponding to a leaf node, is called a segment.

We choose a separate split for each node we extend. For each of the three children of the genre node, we may choose different features to split on.

There’s no use (with categorical features) in splitting on the same feature twice. Every instance that encounters the lower genre node will have the yellow genre, so we’re not splitting the data at all.

The maximum depth is an optional hyperparameter. We can also train without a maximum depth and rely only on the other two stop conditions.

In order to make this into a proper algorithm we need to make this more precise. The best feature to split on is the one that creates (averaged over all child nodes) the most non-uniform class distribution in the resulting segment.

How do we measure the non-uniformity of a distribution? It’s straightforward for two classes (the further from 50/50 the less uniform), but for more than two classes, it’s not so clear cut. Here we see two distributions. In the first, the proportion of the red class is bigger than in the second, but in the second the remainder is divided up between blue and orange in a more non uniform way. Which of these two distributions is less uniform?

To answer this, we need to look back to the first probability lecture, where we encountered entropy.

The more uniform our distribution is (the more unsure we are), the higher the entropy. This kind of uniformity over the class distribution is what we are trying to avoid in our splits: we want the segments after the split to have low entropy.

So we can use entropy to establish how uneven a class distribution is, and the more uneven, the better we like the split. But to evaluate this split here, we need to look at four different distributions: the three after the split and the one before (remember, we are evaluating all possible splits over the whole tree, so the incoming distribution may differ between candidates).

To apply this to the multiple children that a split creates, we can use conditional entropy. Conditional entropy is just the entropy of a conditional distribution, averaged over all values of the variable we condition on, with each value weighted by its marginal probability.

The information gain measures how much knowing the value of G decreases the entropy of O (i.e. increases what we know about O).

In practice that gives us this formula, for the information gain of a split. If the set of instances before the split is S, and the split gives us subsets Si, the information gain is the entropy of S minus the sum of the entropies of the split sets, each weighted by the proportion of instances of S contained in Si.
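Written out, with p_c the proportion of class c in S, the description above corresponds to the following. The base-2 logarithm is an assumption here; the base is a convention that the text does not fix.

```latex
H(S) = -\sum_{c} p_c \log_2 p_c
\qquad\qquad
IG(S; S_1, \ldots, S_k) = H(S) - \sum_{i=1}^{k} \frac{|S_i|}{|S|} \, H(S_i)
```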

When we compute the entropy of a set like S, we just use the relative frequencies to estimate the probabilities. For instance, we estimate the probability of the red class in S as 23/(23+12+11).
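To make the procedure concrete, here is a rough Python sketch of entropy, information gain and greedy tree growing on categorical features. The toy dataset is invented, and the stop conditions used (a pure segment, no features left, or an optional maximum depth) are assumptions. The sketch also grows the tree recursively, one branch at a time, rather than picking the best node over the whole tree at each step.

```python
from collections import Counter
from math import log2

def entropy(labels):
    """Entropy (in bits) of the class distribution, estimated by relative frequencies."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def information_gain(labels, subsets):
    """Entropy before the split minus the size-weighted entropies after it."""
    n = len(labels)
    return entropy(labels) - sum(len(s) / n * entropy(s) for s in subsets)

def split(rows, labels, feature):
    """Group the labels by the value each row has for the given feature."""
    groups = {}
    for row, y in zip(rows, labels):
        groups.setdefault(row[feature], []).append(y)
    return groups

def grow(rows, labels, features, depth=0, max_depth=None):
    """Return a leaf label, or a (feature, {value: subtree}) pair."""
    if len(set(labels)) == 1 or not features or depth == max_depth:
        return Counter(labels).most_common(1)[0][0]  # majority class
    best = max(features,
               key=lambda f: information_gain(labels, split(rows, labels, f).values()))
    remaining = [f for f in features if f != best]  # no use splitting on it twice
    children = {}
    for value in set(row[best] for row in rows):
        keep = [i for i, row in enumerate(rows) if row[best] == value]
        children[value] = grow([rows[i] for i in keep], [labels[i] for i in keep],
                               remaining, depth + 1, max_depth)
    return (best, children)

# Invented toy data, loosely in the spirit of the running example.
rows = [{"genre": "scifi", "rating": "PG"}, {"genre": "scifi", "rating": "R"},
        {"genre": "drama", "rating": "PG"}, {"genre": "drama", "rating": "R"},
        {"genre": "comedy", "rating": "PG"}, {"genre": "comedy", "rating": "R"}]
labels = ["overlooked", "overlooked", "won", "nominated", "nominated", "nominated"]

tree = grow(rows, labels, ["rating", "genre"])
print(tree)

# The set S from the text: 23 red, 12 of a second class, 11 of a third.
print(entropy(["red"] * 23 + ["blue"] * 12 + ["orange"] * 11))
```

On this toy data, genre has the higher information gain at the root, and only the drama segment needs a further split on rating.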

If our dataset contains numeric features, we can deal with this by choosing a threshold t. A node splitting on a numeric feature splits the segment in two: the instances for which the feature is lower than t go to one child, and the instances for which the feature is higher go to the other.

To compute the optimal threshold we only need to look at numeric values halfway between two instances with a different class. We compute the information gain for each and choose the threshold which provides the highest information gain.
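The threshold search can be sketched as follows. This is a simplified illustration that assumes all feature values are distinct; the function name `best_threshold` and the toy data are made up.

```python
from collections import Counter
from math import log2

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())

def best_threshold(values, labels):
    """Try the midpoints between neighbouring instances of different classes
    and return (threshold, information gain) for the best one.
    Assumes the feature values are all distinct."""
    pairs = sorted(zip(values, labels))
    n = len(pairs)
    best_t, best_gain = None, 0.0
    for (v1, y1), (v2, y2) in zip(pairs, pairs[1:]):
        if y1 == y2:
            continue  # a threshold between two same-class neighbours is never needed
        t = (v1 + v2) / 2
        left = [y for v, y in pairs if v < t]
        right = [y for v, y in pairs if v >= t]
        gain = entropy(labels) - (len(left) / n * entropy(left)
                                  + len(right) / n * entropy(right))
        if gain > best_gain:
            best_t, best_gain = t, gain
    return best_t, best_gain

t, gain = best_threshold([1.0, 2.0, 3.0, 4.0], ["a", "a", "b", "b"])
print(t, gain)
```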

We saw a classifier with numeric features in the opening lecture.

When training an actual decision tree on these two numeric features, we saw quite a complex decision boundary emerge.

This is possible with only two features because with numeric features it does make sense to split twice on the same feature; we just have to split on a different threshold each time.

Which brings us to the problem of overfitting. The larger the tree grows, the more likely it is to overfit the data. For this plot, the size of the tree is limited to a particular maximum. As the maximum grows, the training accuracy increases, but the test accuracy decreases. A clear sign that the model is overfitting.

To reduce overfitting, we can prune a tree.

After training the full tree, we work backwards from the leaves. For each leaf, we check (on withheld data) whether the tree classifies better with the leaf or without. If it works better without, we remove the node. We keep pruning leaves until the performance stops improving.

It’s important to note that when we use withheld data to guide pruning, we are using that data to select our model. This means that if we are also using a validation set, for instance to select whether we’ll use a kNN classifier or a decision tree, the pruning should happen on a withheld part of the training data, and not on the same validation data that we use to do our hyperparameter selection.

To see why, imagine what happens when we train our final model (supposing that we’ve selected a decision tree). During training, we can’t use the test set to do our pruning. We can only see the test set when we’ve decided what our final model is going to be. The first train/validation split should simulate this situation, so we can’t use the orange validation set for controlling search.

We’ve seen classification trees that use numerical features, but what if the target label is numerical? In this case, the model is called a regression tree.

We can label the leaves with the mean or the median of the training instances in the resulting segment.

We can’t compute entropy over the target values because, most likely, they’ll all be different. However, variance measures a very similar property: the bigger the spread in the set of output labels, the less certain we are about what the value of the leaf node should be. The best split results in a large reduction of average variance over the created segments.
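A small Python sketch of this criterion is given below. It uses the size-weighted average of the population variance of each segment; the weighting by segment size is an assumption that mirrors the information gain formula, and the numbers are invented.

```python
from statistics import mean, pvariance

def variance_reduction(targets, segments):
    """Variance before the split minus the size-weighted variance after it."""
    n = len(targets)
    return pvariance(targets) - sum(len(s) / n * pvariance(s) for s in segments)

targets = [1.0, 1.2, 0.9, 5.0, 5.1, 4.8]
good_split = [[1.0, 1.2, 0.9], [5.0, 5.1, 4.8]]  # separates low from high targets
bad_split = [[1.0, 5.0, 0.9], [1.2, 5.1, 4.8]]   # mixes them

print(variance_reduction(targets, good_split))
print(variance_reduction(targets, bad_split))

# Each leaf is labelled with the mean of its segment.
print([mean(s) for s in good_split])
```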

As we saw in the first lecture, here’s what a regression tree looks like over one numeric feature.

And here’s what it looks like for two numeric features.

source: http://healthcare-economist.com/2015/11/16/what-are-regression-trees/

Tree models are a classic example of a model class that provides a generalization hierarchy. At one end, the model class provides both very simple, low capacity models like constant models, which output just one value for all instances (i.e. a tree without splits) and stumps, models that make just one split. These are low capacity models with high bias and low variance, that generalize a lot.

At the other end are full-depth tree models, which are very likely to memorise irrelevant details of the data, and overfit a lot.

Single decision trees are not very popular any more. To make them effective, we need to train many of them and combine them into a single model. These are called decision or regression forests, and they’re an example of a model ensemble, which we’ll discuss in the next part.

Ensembling is the business of combining multiple models into one. The hope is that this gives you a model that is more than the sum of its parts.

Your collection of models is called the ensemble. In the simplest form of ensembling, each model in the ensemble can be trained in isolation as you would normally train it.

After you train a bunch of separate models, you need to somehow combine their predictions. The simplest approach in classification is to take a majority vote among the ensemble. The simplest approach in regression is to average the outputs. You can also take a weighted vote, perhaps giving greater weight to the predictions of models that have greater confidence, or models that perform better on a held out validation set.
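These combination rules are simple enough to sketch in a few lines of Python. The function names and the example weights are illustrative only.

```python
from collections import Counter

def majority_vote(predictions):
    """Classification: the class predicted by most models in the ensemble."""
    return Counter(predictions).most_common(1)[0][0]

def weighted_vote(predictions, weights):
    """Classification: each model's vote counts with its own weight."""
    totals = {}
    for p, w in zip(predictions, weights):
        totals[p] = totals.get(p, 0.0) + w
    return max(totals, key=totals.get)

def average(outputs):
    """Regression: the mean of the model outputs."""
    return sum(outputs) / len(outputs)

predictions = ["won", "nominated", "nominated"]
print(majority_vote(predictions))                   # two votes against one
print(weighted_vote(predictions, [0.7, 0.1, 0.1]))  # the first model dominates
print(average([1.0, 2.0, 3.0]))
```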

A more complex approach is stacking.

Imagine that we have a simple dataset, that we train three models on. These could be any three models. For instance, the three different models we saw in the first lecture, three neural networks, with different initializations, or three kNN models with different values of k. Each of them gives us a prediction for every instance in our dataset.

The idea of stacking is that we treat these predictions as features themselves, for another classifier. The simplest way to think of this is as adding these predictions to our dataset as columns.

We then train a new model, called a combiner, on this new, extended data. The combiner can choose how to combine the “expert advice” of the original models, and it can even use the original features to learn which expert to listen to in which part of the feature space.

In general, the combiner is a simple, low-variance model like a logistic regression.
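The data-extension step of stacking can be sketched as below. The three base models are hypothetical hand-written rules standing in for trained models, and training the combiner itself is left out.

```python
# Hypothetical base models: any function from a feature vector to a prediction.
def model_a(x):
    return 1 if x[0] > 0.5 else 0

def model_b(x):
    return 1 if x[1] > 0.5 else 0

def model_c(x):
    return 1 if x[0] + x[1] > 1.0 else 0

def extend(X, models):
    """Append each base model's prediction to the original features as new columns."""
    return [list(x) + [m(x) for m in models] for x in X]

X = [[0.2, 0.9], [0.7, 0.1], [0.8, 0.8]]
X_stacked = extend(X, [model_a, model_b, model_c])
for row in X_stacked:
    print(row)
```

The combiner would then be trained on `X_stacked` with the original labels, so it sees both the original features and the three predictions.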

If we use stacking in combination with differentiable models like neural nets, then the stacked model is also fully differentiable, and can be finetuned end-to-end by backpropagation.

That is all very ad-hoc. To get a better handle on exactly what we’re trying to achieve with ensembling, we need to look back to bias and variance.

Here is a little visual reminder.

If we have many darts hitting close together, but far away from the bulls-eye, we have high bias. If the darts are spread out, but their average is the bulls-eye, we have high variance. It’s important to remember that in this analogy sampling a dataset and training a model together counts as one dart. To get a second dart, we need a new dataset to train a new model on. That’s not usually a luxury we have, so normally we can’t be exactly sure whether our error is due to high bias or high variance, but with tricks like resampling the data, we can often get a pretty good idea.

image source: http://scott.fortmann-roe.com/docs/BiasVariance.html

We’ll start with learners (a model together with its search algorithm) that have high variance. We also call these unstable learners. These may achieve good performance, but slight perturbations in the data can throw that performance off.

These are the kinds of models that tend to show overfitting, like kNN regression with low values of k, or a regression tree with no regularization. Bias and variance are only precisely defined for regression problems, but the basic intuition carries over to classification. An unstable learner is one that tends to overfit.

In lecture Methodology 2, we saw a method for simulating the sampling of multiple datasets from the source of our original data: bootstrapping. We can use bootstrapping both to get an idea of our bias/variance tradeoff and as a way to help us build an ensemble.

Before we do that, however, let’s see why bootstrapping works so well. It’s more than just an intuitive trick. We can make precise exactly how it approximates our data distribution.

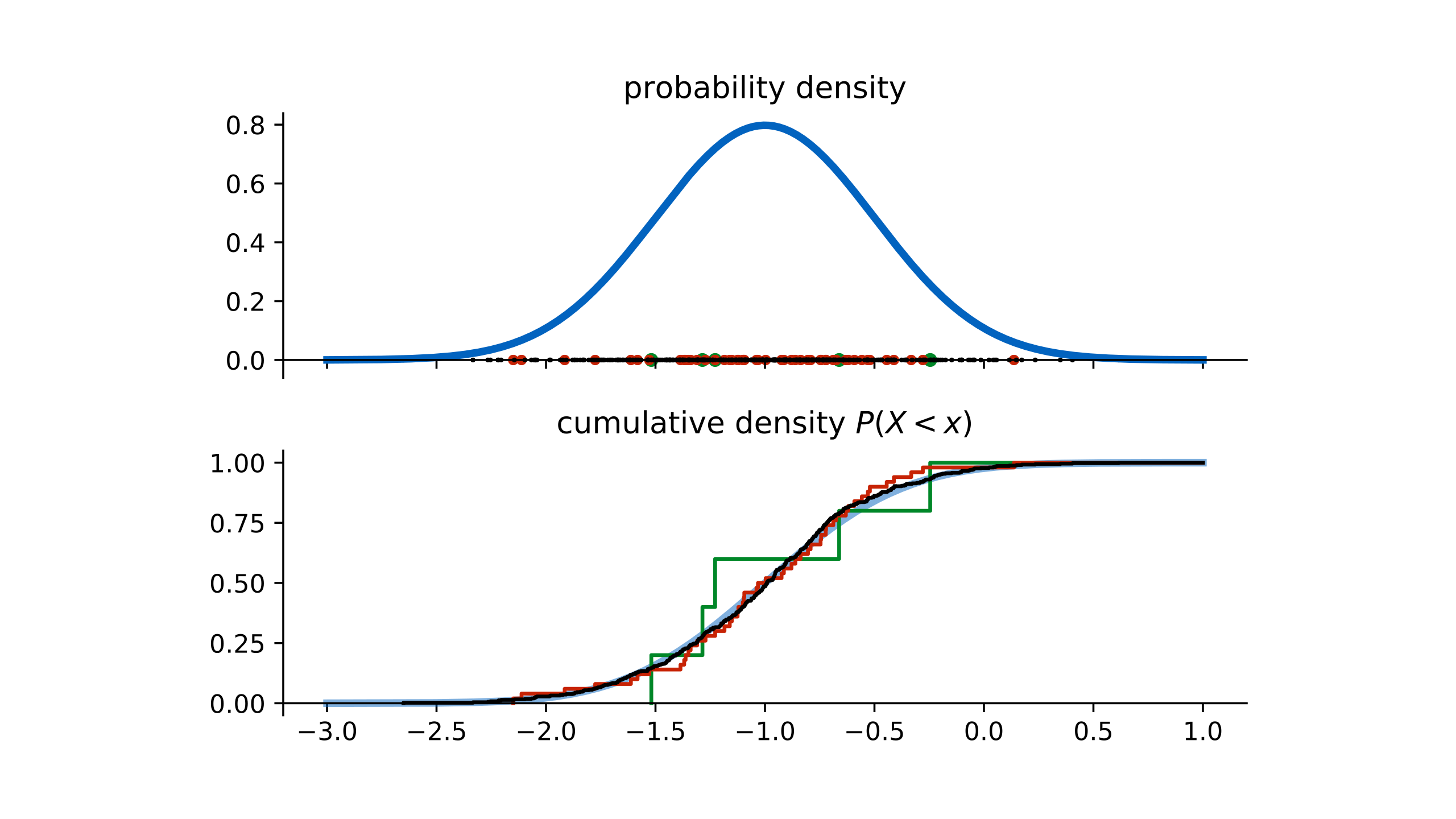

To make this clear, we’ll imagine that we’re sampling single scalars from a normal distribution.

In this case, we can look at the cumulative distribution function (CDF). This tells us the probability of sampling a point below x. Note that this function returns a probability, not a density. It always grows from 0 to 1 over the domain of the probability distribution. Both the cumulative distribution function and the probability density function uniquely determine the probability distribution.

If we sample 5 points from the original normal distribution, and then re-sample one point from that dataset, we are essentially sampling from the green CDF. This is called the empirical distribution: the distribution we get by resampling one point in our dataset.

If we increase the size of the original data (to 50 points), we see that the empirical CDF becomes a better approximation of the true CDF.

At 500 points, the empirical CDF and the original CDF are almost indistinguishable. This is why bootstrapping is often preferred over other resampling methods like cross validation. In this way we can show that a bootstrapped sample from a large dataset mimics the original data distribution.
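The convergence of the empirical CDF can be checked numerically with a short Python sketch. It assumes a standard normal as the true distribution and measures the largest gap between the two CDFs on a fixed grid; the grid and the seed are arbitrary choices.

```python
import random
from statistics import NormalDist

def empirical_cdf(sample, x):
    """Probability that one point resampled from `sample` is at most x."""
    return sum(v <= x for v in sample) / len(sample)

def max_gap(sample, dist, grid):
    """Largest difference between the empirical and the true CDF on the grid."""
    return max(abs(empirical_cdf(sample, x) - dist.cdf(x)) for x in grid)

rng = random.Random(0)
true_dist = NormalDist(mu=0.0, sigma=1.0)
grid = [i / 10 for i in range(-30, 31)]

gaps = {}
for n in (5, 50, 500):
    sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
    gaps[n] = max_gap(sample, true_dist, grid)
    print(n, round(gaps[n], 3))
```

The gap is random for any given sample, but it tends to shrink as the sample grows.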

This can help us to measure the bias and variance of a learner. It can also help us to build an ensemble.

This is called bootstrap aggregating, or bagging for short. We don’t change the way we train the models in our ensemble, we just resample the data by bootstrapping.

This is most often done with classifiers. In that case, after the ensemble is trained, we simply take a majority vote to get the ensemble prediction. If we want class probabilities, we can use the relative frequencies of each class among the predictions.
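A minimal bagging sketch in Python follows. It uses a one-dimensional 1-nearest-neighbour classifier as the unstable base learner; that choice, the toy data and the ensemble size of 25 are assumptions made for illustration.

```python
import random
from collections import Counter

def bootstrap(data, rng):
    """Resample the dataset with replacement, keeping its size."""
    return [rng.choice(data) for _ in data]

def train_1nn(sample):
    """A 1-nearest-neighbour classifier on a single numeric feature."""
    def predict(x):
        return min(sample, key=lambda point: abs(point[0] - x))[1]
    return predict

def bagged_predict(models, x):
    """Majority vote, plus class probabilities as relative vote frequencies."""
    votes = Counter(model(x) for model in models)
    label = votes.most_common(1)[0][0]
    proba = {c: k / len(models) for c, k in votes.items()}
    return label, proba

data = [(-3, "neg"), (-2, "neg"), (-1, "neg"), (1, "pos"), (2, "pos"), (3, "pos")]
rng = random.Random(0)
models = [train_1nn(bootstrap(data, rng)) for _ in range(25)]

label, proba = bagged_predict(models, 2.5)
print(label, proba)
```

The training procedure of each model is unchanged; only the data it sees differs from one ensemble member to the next.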

Here’s a simple example. We train a set of linear classifiers on bootstrap samples of our data. Each produces a slightly different linear decision boundary (indicated by a dotted line). We now build an ensemble that looks at what each of these classifiers says, and picks the majority class among those predictions. This gives us a piecewise linear decision boundary: every time two decision boundaries in the ensemble cross, the majority changes. So long as they don’t cross, we end up following one of the original linear decision boundaries.

source: adapted from Machine Learning by Peter Flach, figure 11.1

One simple instantiation of bagging is the random forest. Here, we train a bootstrapped ensemble of decision trees, and for each tree we subsample both the instances and the features we include (both the rows and the columns of the data matrix).

In the previous video, we saw the ensembling method of bagging in action.

Bagging helps for models with high variance and low bias (models that tend to overfit). If we have the opposite, a learner with high bias and low variance, can we achieve the same? This is the hypothesis boosting question, where hypothesis is a synonym for a learner or model. Is there an ensembling method that allows us to create a series of models from a family with high bias, like linear models or decision stumps, and to combine them into an ensemble that together has low bias (possibly at the expense of a higher variance)?

In other words, can we turn an underfitting model into an overfitting model by ensembling? Can we, for instance, put lots of linear classifiers together to draw complex, highly nonlinear shapes?

The key to achieving this is to train a series of models, one after the other. Contrast this with bagging, where the models could be trained in parallel. The idea of boosting is that for each subsequent model, we look at what the previous models did to change what our next model is going to do. Specifically, we look at which instances the previous models got wrong.

Most boosting methods work by adding a weight to each instance in the data.

For each new model, we lower the weights of the points that the previous models got right, and increase the weights of the points that the previous models got wrong. We then train the next model to focus on this reweighted version of the data.

Here is a generic boosting algorithm. The idea is that we train an ensemble of k classifiers. We start with a single model m_0, and we add classifiers to the ensemble one at a time. At each step, we see how the current ensemble does, check which instances it gets wrong, and train the next model to focus on those (by increasing their weight). We then assign the new classifier m_t a weight a_t, which determines how big a role it plays in the ensemble, and we add it. At each step t, the classification the ensemble makes is a weighted sum over all the classifiers we’ve trained so far.

To turn this idea into a proper algorithm, we need to make a few choices. For instance:

How do we assign the weights to the dataset based on the current ensemble performance? We know that mistakes should lead to higher weights, but what function exactly should we use?

How do we train a model on weighted data?

Once we’ve trained a model, how much should its weight a_t in the ensemble be? It makes sense to make the weight higher if the model performs better, but what exactly should the function be?

Let’s start with this question: how do we train on a weighted dataset? If we have a loss function, we can just make the sum over the loss of each instance a weighted sum. We saw examples of weighted maximum likelihood loss in the lecture on density estimation.

If our model isn’t trained by an explicit loss function, like for instance the tree models we saw in the first two parts of this lecture, we can instead resample the dataset, and make the instances with higher weights more likely to be sampled.
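Both options are easy to sketch in Python. The function names and the example weights are illustrative; the resampling uses `random.choices` from the standard library, which samples with replacement in proportion to the given weights.

```python
import random

def weighted_loss(losses, weights):
    """Option 1: turn the sum over per-instance losses into a weighted sum."""
    return sum(w * l for w, l in zip(weights, losses))

def weighted_resample(data, weights, k, rng):
    """Option 2: resample so that heavier instances are drawn more often."""
    return rng.choices(data, weights=weights, k=k)

print(weighted_loss([1.0, 2.0], [0.5, 2.0]))

data = ["a", "b", "c", "d"]
weights = [0.05, 0.05, 0.05, 0.85]
sample = weighted_resample(data, weights, k=1000, rng=random.Random(0))
print({x: sample.count(x) for x in data})
```

A model without an explicit loss can then be trained on `sample` as if it were an ordinary, unweighted dataset.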

Next, we need to decide how to set the instance weights w_i and how to set the model weights a_t.

We’ll first take a look at AdaBoost (which stands for adaptive boosting), which is a principled derivation for how to set these.

We assume we’ve already trained the ensemble up to model m_{t-1}, giving us ensemble model M_{t-1}. We now need to make two choices: which model m_t to choose (or what loss to minimize when training this model) and which model weight a_t to assign it.

To do so, we first define the error (aka the loss) for the ensemble at step t, and then choose m_t and a_t to minimize this loss. This is a slightly unusual loss, compared to what we’ve seen so far. We assume that the ensemble produces a real value (anywhere between negative and positive infinity) and that its sign corresponds to the predicted class.

We then multiply this by the true sign y_i to get a value that is large and positive if the ensemble confidently assigns the correct sign, and large and negative if it confidently assigns the wrong sign. The loss is the exponential of the negative of this value. If the ensemble gets the class right, it ends up to the left in the above graph, and if it gets it wrong it ends up to the right. We sum this loss over all instances to get the total loss at time t.

From this loss we can derive an analytical answer to our questions of what the new instance weights should be and what the model weight should be.

We can rewrite the per-instance loss to separate the error caused by the ensemble so far from the loss caused by our choice of model m_t. The first is a constant (since the ensemble up to m_{t-1} has already been chosen), which becomes the weight w_i of instance x_i.

For the total loss, we can split the sum into the instances that are correctly classified and the instances that are incorrectly classified by the new classifier m_t. This means the exponents become constants in the sum and can be moved to the front.

Line five is not equal to line four, but minimising line four is the same as minimising line five. This follows by multiplying the whole quantity to be minimized by exp(a_t), which is constant with respect to m_t. The result is that the multiplier of the first term becomes 1 and the multiplier of the second term becomes exp(2a_t).

In the last line, all the greyed out parts are constant with respect to m_t: W_c and W_i each depend on which classifier we choose, but their sum is just the sum of all the weights provided by the ensemble so far.

In the last line, we add W_i to the first term and subtract it from the second.

The take-away here is that choosing m_t to minimize the error E_t consists of just minimising the sum of the weights of the instances misclassified by m_t.

To choose a_t, we just compute the derivative of the error with respect to a_t, set it to zero and solve for a_t.
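As a reconstruction of this step (the slide's own formula is not reproduced in these notes), assume that the new classifier outputs -1 or +1, write a_t for its model weight, and let W_c and W_i be the total weight of the instances it classifies correctly and incorrectly. Under the exponential loss described above, the calculation would run as follows.

```latex
E_t = \sum_i w_i \, e^{-y_i a_t m_t(x_i)} = e^{-a_t} W_c + e^{a_t} W_i

\frac{\partial E_t}{\partial a_t} = -e^{-a_t} W_c + e^{a_t} W_i = 0
\quad\Rightarrow\quad e^{2 a_t} = \frac{W_c}{W_i}
\quad\Rightarrow\quad a_t = \frac{1}{2} \ln \frac{W_c}{W_i}
```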

Intuitively, the formula for a_t states that the better the proportion of correct to incorrect labelings, the more model t should weigh in the ensemble. The logarithm ensures a kind of diminishing return of weight: getting 11 instances correct instead of 10 has much more impact on the weight than getting 101 instances correct instead of 100.
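Putting the pieces together, here is a compact AdaBoost sketch in Python. It uses threshold stumps on a single numeric feature and labels in {-1, +1}. The model weight is taken to be half the log of the ratio of correctly to incorrectly classified weight, which is what minimising the exponential loss gives under these assumptions. The ensemble starts empty rather than with an initial model, and the data is invented.

```python
from math import exp, log

def stump_predict(stump, x):
    """A stump (t, s) predicts s if x > t and -s otherwise."""
    t, s = stump
    return s if x > t else -s

def candidate_stumps(xs):
    """One stump per threshold (below all points, or between neighbours) and sign."""
    srt = sorted(set(xs))
    thresholds = [srt[0] - 1] + [(a + b) / 2 for a, b in zip(srt, srt[1:])]
    return [(t, s) for t in thresholds for s in (-1, 1)]

def adaboost(xs, ys, rounds):
    w = [1.0] * len(xs)  # instance weights: exp(-y * ensemble output), ensemble empty
    ensemble = []        # list of (model weight, stump) pairs
    for _ in range(rounds):
        def wrong_weight(stump):
            return sum(wi for wi, x, y in zip(w, xs, ys) if stump_predict(stump, x) != y)
        # Choose the stump that minimises the total weight of its mistakes.
        m = min(candidate_stumps(xs), key=wrong_weight)
        w_incorrect = wrong_weight(m)
        w_correct = sum(w) - w_incorrect
        if w_incorrect == 0:  # a perfect stump would get infinite weight
            ensemble.append((1.0, m))
            break
        a = 0.5 * log(w_correct / w_incorrect)
        ensemble.append((a, m))
        # Mistakes get heavier, correctly classified instances get lighter.
        w = [wi * exp(-y * a * stump_predict(m, x)) for wi, x, y in zip(w, xs, ys)]
    return ensemble

def ensemble_predict(ensemble, x):
    score = sum(a * stump_predict(m, x) for a, m in ensemble)
    return 1 if score > 0 else -1

# No single stump can separate this pattern.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]

ensemble = adaboost(xs, ys, rounds=3)
print([ensemble_predict(ensemble, x) for x in xs])
```

Each stump on its own misclassifies at least two of the six points, yet the weighted sum of three stumps classifies all of them correctly.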

If we visualise the learners, we can see clearly why boosting is so much more powerful than bagging. Since bagging works in parallel, every member of the ensemble will end up looking roughly the same, providing little variation in the ensemble. If we start with underfitting classifiers (like linear ones), the ensemble decision boundary doesn’t look much different from the individual ones.

In boosting, since each learner is trained in sequence, based on what the previous learners did, we get much more variation, giving us a combined decision boundary that can be much more powerful than what the original decision boundaries looked like.

Adapted from Machine Learning by Peter Flach, figure 11.2

When we want to boost regression models, we can look to gradient boosting. The idea here is that we don’t reweight the dataset, but instead, we look at the residuals of the ensemble so far, and try to train a model to predict those. If we can keep doing this successfully, we can eventually subtract all residuals to get a perfect prediction.

To illustrate, let’s start with a constant model. We’ll minimize squared error, so the optimal constant is the mean of the data.

We take the residuals of the previous model, and train a new model, m_1, to predict the residuals. The new ensemble model adds these predictions to the predictions of the first.

This ensemble model has new residuals, which we can then train another model m_2 to predict. We add that to the ensemble, and so on.

This is the algorithm in detail. We start with an ensemble model consisting of only a constant predictor. At each iteration, we compute the residuals for the current ensemble model, and add a predictor for the residuals to the ensemble. The new model is added with a weight gamma, which helps us if the new model happens to fit the residuals poorly.
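A rough Python sketch of this loop is shown below, using regression stumps on a single numeric feature as the residual predictors. The fixed weight `gamma=0.5` is an arbitrary assumption, and the data is invented.

```python
from statistics import mean

def fit_stump(xs, targets):
    """Regression stump: the threshold with the lowest squared error,
    with each side predicting the mean of its targets."""
    best = None
    srt = sorted(set(xs))
    for a, b in zip(srt, srt[1:]):
        t = (a + b) / 2
        left = [r for x, r in zip(xs, targets) if x < t]
        right = [r for x, r in zip(xs, targets) if x >= t]
        lm, rm = mean(left), mean(right)
        err = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lm, rm)
    _, t, lm, rm = best
    return lambda x: lm if x < t else rm

def gradient_boost(xs, ys, rounds, gamma=0.5):
    constant = mean(ys)  # the best constant model under squared error
    models, weights = [lambda x: constant], [1.0]

    def predict(x):
        return sum(w * m(x) for w, m in zip(weights, models))

    for _ in range(rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        models.append(fit_stump(xs, residuals))  # train on the current residuals
        weights.append(gamma)                    # add it with weight gamma
    return predict

def mse(predict, xs, ys):
    return mean((y - predict(x)) ** 2 for x, y in zip(xs, ys))

xs = [float(x) for x in range(10)]
ys = [x ** 2 for x in xs]

baseline = gradient_boost(xs, ys, rounds=0)
boosted = gradient_boost(xs, ys, rounds=20)
print(mse(baseline, xs, ys), mse(boosted, xs, ys))
```

With zero rounds the ensemble is just the constant predictor; every added stump fits what the ensemble still gets wrong on the training data.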

In contrast to AdaBoost, there is no principled way to set the weights. We simply search for a good value by optimization, or slowly decay the weights.

To see why we call it gradient boosting, imagine a model which simply stores a single value w_i which is the value it will predict for instance x_i. This is a perfectly overfitting model, of course, and we cannot expect it to learn anything, but we can think of its negative gradient as a kind of ideal direction for where we want a more realistic model to go. If we could control all the outputs of our model for all instances individually, we would want to follow this negative gradient to minimize the loss.

We can’t, of course: in a realistic model, changing one parameter changes multiple predictions at the same time. But ideally, this is the gradient on our “output space” that we want to approximate.

If our loss function is the squared error loss, then the negative gradient for this model is given (up to a constant factor) by the residuals.

In other words, the residual for instance i is the negative gradient of the loss for instance i with respect to the output for instance i.
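As a worked version of this claim, assume the squared error carries a factor of one half and the residual is defined as target minus prediction. Both conventions are assumptions; without the factor, the result is twice the residual.

```latex
L_i = \tfrac{1}{2} \left( \hat{y}_i - y_i \right)^2
\quad\Rightarrow\quad
\frac{\partial L_i}{\partial \hat{y}_i} = \hat{y}_i - y_i
\quad\Rightarrow\quad
-\frac{\partial L_i}{\partial \hat{y}_i} = y_i - \hat{y}_i
```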

By adding the residuals to the previous model, we are performing a kind of gradient descent for models that don’t support it (like regression trees). We are training a model to predict where we’d like to be after one step of gradient descent, and adding that model to our ensemble. The ensemble is following the approximate gradient in output space.

Compare this to what we saw in backpropagation. There, we also work out the derivative of the loss with respect to the network output y (we called it a local derivative in this context).

We then took this derivative and backpropagated it down the network to work out derivatives for the weights. We can think of gradient boosting as a way of accomplishing this for models where backpropagation isn’t possible. Instead, we train a model to predict the effect of the gradient update step on the output space, and we bolt that model onto our original.

The benefit of this perspective is that it allows us to generalise the idea of gradient descent to other loss functions. We can simply work out this derivative, and train the next model in our ensemble to predict it. We call this a pseudo-residual.

The resulting value isn’t as intuitive as a proper residual, but training the next model to predict the pseudo residuals works just as well to minimize the loss.

For instance, here is how it works for the mean absolute error loss (also known as the L1 loss): the absolute value of the difference between output and target. As we’ve seen before, minimizing absolute errors instead of squared errors leads to gradients composed of the sign of the residuals, and to the median as a minimizer.

Therefore, if we want to use gradient boosting to minimize the MAE loss, we should train the next model in our ensemble to predict only the sign, -1 or +1, of the residuals of the ensemble so far, and add that to the current predictions.
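The two kinds of training target can be put side by side in a short Python sketch. The function name `pseudo_residuals` and its `loss` argument are illustrative, and returning 0 for a residual of exactly zero is a convention chosen here, since the absolute value has no unique derivative at that point.

```python
def sign(v):
    """-1, 0 or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def pseudo_residuals(ys, preds, loss="mse"):
    """Targets for the next model: the negative gradient of the loss
    with respect to the current ensemble output, per instance."""
    if loss == "mse":
        return [y - p for y, p in zip(ys, preds)]        # ordinary residuals
    if loss == "mae":
        return [sign(y - p) for y, p in zip(ys, preds)]  # only their sign
    raise ValueError("unknown loss: " + loss)

ys = [3.0, 1.0, 2.0]
preds = [1.0, 4.0, 2.0]
print(pseudo_residuals(ys, preds, "mse"))
print(pseudo_residuals(ys, preds, "mae"))
```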